Published for the first time in El País

Do you know the Twitter account New New York Times? It’s an account created by Max Bittker that tweets words the first time they appear in the history of the New York Times.

pretentiousless

— New New York Times (@NYT_first_said) July 7, 2023

The project has always struck me as a marvel. A few months ago I set myself the challenge of doing the same with the Spanish newspaper El País. The result is the Twitter account Primera vez en El País (First time in El País). The project turned out to be more complex than I expected, but I managed to solve the technical problems I ran into. Below, I explain how the NYT bot works and how the one I built for El País works.

How does the New York Times bot work?

As Bittker explains in the project’s GitHub repository and on its website, the process consists of the following steps:

- Scrape the articles on the front page of the newspaper’s digital edition and tokenize their text, that is, split it into words.

- Filter out irrelevant tokens such as proper nouns, numbers, special characters, URLs, and social media usernames.

- Check the words that survive this filter first against a set of previously seen words and then against the official NYT API, which lets you search a database of more than 13 million articles the newspaper has published since 1851.

- If any word passes all of the above filters, a Python script tweets it.
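The tokenizing and filtering steps above can be sketched in Python as follows. This is a minimal illustration, not Bittker’s code: the regular expressions, the “capitalised means proper noun” rule and the in-memory `seen` set are all assumptions made for the example, and the final NYT API check is left out.

```python
import re

# Illustrative rules only (assumption); the real NYT bot's filters differ.
URL_RE = re.compile(r"https?://\S+|www\.\S+")
HANDLE_RE = re.compile(r"@\w+")
# A word: a run of Unicode letters, optionally joined by inner hyphens or apostrophes.
# Digits and underscores are excluded, so numbers never become words.
WORD_RE = re.compile(r"[^\W\d_]+(?:[-'][^\W\d_]+)*")


def tokenize(text: str) -> list[str]:
    """Strip URLs and @handles, then split the remaining text into words."""
    text = URL_RE.sub(" ", text)
    text = HANDLE_RE.sub(" ", text)
    return WORD_RE.findall(text)


def candidate_words(text: str, seen: set[str]) -> list[str]:
    """Return lowercase words not yet in `seen`, in order of first appearance."""
    kept: list[str] = []
    kept_set: set[str] = set()
    for token in tokenize(text):
        # Crude proper-noun filter: drop capitalised tokens (this also drops
        # sentence-initial words, a trade-off a real bot would handle better).
        if token[0].isupper():
            continue
        word = token.lower()
        if word in seen or word in kept_set:
            continue
        kept.append(word)
        kept_set.add(word)
    return kept
```

Because Python’s `re` treats `\w` as Unicode by default, accented words such as “acércamelo” come out as single tokens. Whatever `candidate_words` returns would then go on to the NYT API check.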

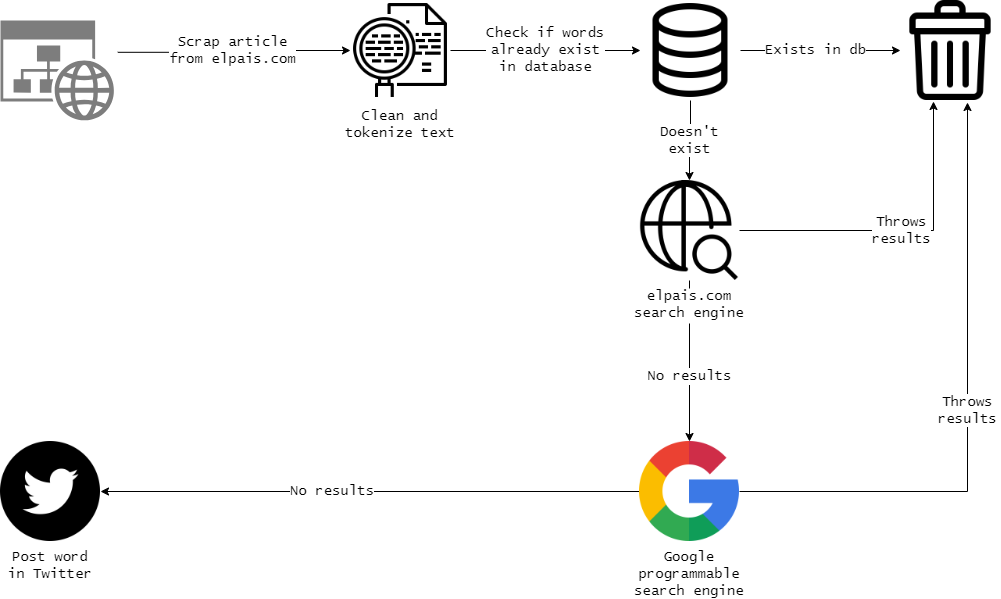

How does Primera vez en El País work?

As the creator acknowledges, the NYT API is the most important filter for deciding whether a word is newly published. Unfortunately, El País has no comparable public API, which is a considerable obstacle to replicating the project with the Spanish newspaper.

What El País does have, in place of an API that would have made my life easier, is a digital archive with all its articles dating back to its founding in 1976. Since El País is a much younger newspaper than the NYT, it is feasible to scrape that archive and store all (or the vast majority) of its articles in a database against which to check the words published each day.

So the first step was to scrape as many articles as possible from the archive, tokenize their texts, and store the unique words in a SQL database. The result is a table of about 310,000 unique words.
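As a rough sketch of that storage step, here is how the unique words could be loaded into a one-column table. It assumes SQLite (via Python’s `sqlite3`) as a stand-in for whatever SQL database is actually used, a hypothetical table name `words`, and a simple letters-only tokenizer; the scraping itself is not shown.

```python
import re
import sqlite3
from typing import Iterable

# Letters only (Unicode-aware, so "país" or "acércamelo" count as one word).
WORD_RE = re.compile(r"[^\W\d_]+")


def create_vocabulary(conn: sqlite3.Connection) -> None:
    """Create the hypothetical single-column `words` table if it does not exist."""
    # PRIMARY KEY gives the column an index and rejects duplicate words.
    conn.execute("CREATE TABLE IF NOT EXISTS words (word TEXT PRIMARY KEY)")


def add_articles(conn: sqlite3.Connection, articles: Iterable[str]) -> None:
    """Tokenize each article and insert its unique lowercase words."""
    for text in articles:
        unique = {token.lower() for token in WORD_RE.findall(text)}
        # INSERT OR IGNORE skips words already stored from earlier articles.
        conn.executemany(
            "INSERT OR IGNORE INTO words (word) VALUES (?)",
            ((word,) for word in unique),
        )
    conn.commit()
```

Deduplicating at insert time keeps the table small: each word is stored once, no matter how many of the archived articles contain it.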

I chose to store unique words rather than complete texts for query performance. Finding out whether a given word has appeared before in hundreds of thousands of headlines and article bodies can take several minutes, even with a full-text index on the table. A query on a single-column table of just over 310,000 unique words (with no long text in each record), on the other hand, takes just a few seconds and yields an equally or more accurate result. Given that El País publishes around 8,000 unique words every day, the difference between a few seconds and a few minutes is crucial.
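The daily check against the word table could then be a single join. This minimal sketch again assumes SQLite and a hypothetical `words (word TEXT PRIMARY KEY)` table. In SQLite a text primary key is backed by an index, so each lookup is an index search instead of a scan through article text. The temporary `today` table is also an illustrative choice.

```python
import sqlite3


def unseen_words(conn: sqlite3.Connection, todays_words: list[str]) -> list[str]:
    """Return the words from today's articles that are not in the `words` table."""
    # Hypothetical schema, created here so the sketch runs on an empty database.
    conn.execute("CREATE TABLE IF NOT EXISTS words (word TEXT PRIMARY KEY)")
    # Load today's candidates into a temporary table (deduplicated by its key).
    conn.execute("CREATE TEMP TABLE IF NOT EXISTS today (word TEXT PRIMARY KEY)")
    conn.execute("DELETE FROM today")
    conn.executemany(
        "INSERT OR IGNORE INTO today (word) VALUES (?)",
        ((word,) for word in todays_words),
    )
    # Anti-join: keep candidates with no matching row in the vocabulary.
    rows = conn.execute(
        """
        SELECT t.word
        FROM today AS t
        LEFT JOIN words AS w ON w.word = t.word
        WHERE w.word IS NULL
        ORDER BY t.word
        """
    ).fetchall()
    return [word for (word,) in rows]
```

One join over roughly 8,000 candidates avoids sending thousands of separate queries from the script.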

This set of unique words (more than 300,000, as I said) is the main filter for discarding the bulk of the words published in the newspaper every day. After some testing, however, I realized that it is far from infallible: dozens of words passed this filter yet could be found in El País through Google or the newspaper’s own search engine. The next step, then, was to use these two search engines.

The problem with Google is that querying its search engine programmatically requires a paid API, although it allows 100 free searches per day. So I decided that this filter should be the last step of the process. Before that step, I wrote an R script that navigates to the El País search engine and searches for the words that passed the first filter. This filter is quite accurate, since it searches the newspaper’s own database, but it is somewhat slow and sometimes returns errors. Thanks to it, only a handful of words (about a dozen) reach the final step, but because of those errors some of them may still have been published before. To polish the result, I query the Google API with search parameters that restrict results to the elpais.com domain and require the term to appear exactly.
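A sketch of that last filter follows, assuming Google’s Custom Search JSON API. `siteSearch`, `siteSearchFilter` and `exactTerms` are real request parameters of that API, but whether these are exactly the ones used here is an assumption. The API key and search engine ID are placeholders, and `google_filter` is a hypothetical helper that keeps the daily calls within the free quota.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable

ENDPOINT = "https://www.googleapis.com/customsearch/v1"
DAILY_FREE_QUERIES = 100  # free daily allowance mentioned above


def build_query_url(word: str, api_key: str, engine_id: str) -> str:
    """Build a Custom Search request restricted to elpais.com and the exact term."""
    params = {
        "key": api_key,              # placeholder credential
        "cx": engine_id,             # placeholder search engine ID
        "q": word,
        "exactTerms": word,          # results must contain this exact term
        "siteSearch": "elpais.com",  # restrict results to this domain...
        "siteSearchFilter": "i",     # ...("i" = include, "e" = exclude)
    }
    return f"{ENDPOINT}?{urllib.parse.urlencode(params)}"


def has_results(response: dict) -> bool:
    """True if a parsed JSON response reports at least one hit."""
    # totalResults is returned as a string; "items" is absent when nothing matches.
    total = response.get("searchInformation", {}).get("totalResults", "0")
    return int(total) > 0 or bool(response.get("items"))


def seen_on_google(word: str, api_key: str, engine_id: str) -> bool:
    """Query the API (network call) and report whether elpais.com has the word."""
    url = build_query_url(word, api_key, engine_id)
    with urllib.request.urlopen(url, timeout=10) as resp:
        return has_results(json.load(resp))


def google_filter(words: list[str], is_seen: Callable[[str], bool],
                  budget: int = DAILY_FREE_QUERIES) -> tuple[list[str], list[str]]:
    """Spend at most `budget` queries; return (new words, words left for tomorrow)."""
    checked, deferred = words[:budget], words[budget:]
    new = [word for word in checked if not is_seen(word)]
    return new, deferred
```

In practice `is_seen` would be something like `lambda w: seen_on_google(w, KEY, CX)`. Since only about a dozen words reach this step, the 100-query budget should rarely be the limit.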

Words that pass all three filters are stored in a database table. Throughout the day, another script checks whether that table contains any words, selects one of them, and publishes one tweet on the ElPaisFirstSaid account and another on the ElPaisSaidWhere account with the context and the link to the article. It then removes the tweeted word from the table of unpublished words and adds it to the table of already published words. This task runs every few minutes as a cron job.
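That publishing job might look roughly like this. It is a sketch under several assumptions: SQLite, hypothetical `pending` and `published` tables, random selection of the next word, and two `post_*` callables standing in for the Twitter client of each account, which is not shown.

```python
import sqlite3
from typing import Callable, Optional

# Hypothetical schema: words waiting to be tweeted, and words already tweeted.
SCHEMA = """
CREATE TABLE IF NOT EXISTS pending (word TEXT PRIMARY KEY, context TEXT, url TEXT);
CREATE TABLE IF NOT EXISTS published (word TEXT PRIMARY KEY);
"""

# Example crontab entry (hypothetical path) running the job every 10 minutes:
# */10 * * * * python3 /path/to/publish.py


def publish_one(conn: sqlite3.Connection,
                post_first_said: Callable[[str], None],
                post_said_where: Callable[[str], None]) -> Optional[str]:
    """Tweet one pending word, then move it from `pending` to `published`."""
    conn.executescript(SCHEMA)
    row = conn.execute(
        "SELECT word, context, url FROM pending ORDER BY RANDOM() LIMIT 1"
    ).fetchone()
    if row is None:
        return None  # nothing waiting to be published
    word, context, url = row
    post_first_said(word)                 # first account: the bare word
    post_said_where(f"{context}\n{url}")  # second account: context and link
    # Both statements are committed together, or neither is.
    with conn:
        conn.execute("DELETE FROM pending WHERE word = ?", (word,))
        conn.execute("INSERT OR IGNORE INTO published (word) VALUES (?)", (word,))
    return word
```

Posting before touching the database means that if a post raises an exception, the word stays in `pending` and the next cron run tries again. The trade-off is that a failure between the two posts could lead to the first tweet being repeated.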

The final result is neither exhaustive nor 100% accurate. Some uninteresting words still slip through, such as terms in other languages or alphabets, verbs with attached (enclitic) pronouns (single Spanish words equivalent to “bring it closer to me”), or typos (although the latter, as the creator of the original project says, are part of the bot’s charm). Even so, I’m very satisfied with the result, and I enjoyed building it because it forced me to deepen my knowledge of topics such as databases, APIs, and regular expressions (regex).

queeritud

— Primera vez en El País (@ElPaisFirstSaid) August 30, 2023

As I always like to do, I have made all the code available in the project’s GitHub repository.